We are familiar with the sigmoid function from learning logistic regression, and from neural networks, where it is used as a non-linear activation function.

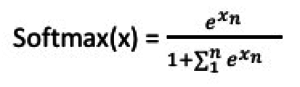

The softmax function is another type of non-linear function.
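
For reference, the usual textbook definition of softmax is sketched below. The symbols $z$ (the input vector), $K$ (its length), and the index $i$ are notation chosen here for illustration, not taken from the original post.

$$
\operatorname{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \dots, K
$$

Every output lies between 0 and 1, and the outputs sum to 1, so they can be read as a probability distribution over the $K$ entries.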

The sigmoid function is defined mathematically as:

$$
\sigma(x) = \frac{1}{1 + e^{-x}}
$$

How do the two differ in usage? Here is a good explanation.

Wikipedia also gives a good example for softmax:

If we take an input of [1, 2, 3, 4, 1, 2, 3], the softmax of that is [0.024, 0.064, 0.175, 0.475, 0.024, 0.064, 0.175]. The output has most of its weight where the ‘4’ was in the original input. This is what the function is normally used for: to highlight the largest values and suppress values which are significantly below the maximum value…
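
The numbers in that example can be reproduced with a few lines of Python. This is a minimal sketch using only the standard library. The helper names `softmax` and `sigmoid` are chosen here for illustration, and subtracting the maximum before exponentiating is a common numerical-stability trick assumed here, not something the quoted example mentions.

```python
import math


def softmax(values):
    """Return the softmax of a list of numbers as a list of floats."""
    # Shifting by the maximum avoids overflow in exp() and does not
    # change the result, because the shift cancels in the ratio.
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Return the logistic sigmoid 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


# Input from the quoted example.
probs = softmax([1, 2, 3, 4, 1, 2, 3])
print([round(p, 3) for p in probs])
# prints [0.024, 0.064, 0.175, 0.475, 0.024, 0.064, 0.175]

# With two inputs, one of them fixed at 0, softmax reduces to the sigmoid.
print(math.isclose(softmax([1.5, 0.0])[0], sigmoid(1.5)))
# prints True
```

The last check hints at how the two functions relate: sigmoid handles a single yes/no score, while softmax spreads probability across several scores at once.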